AI Risk Management for Salesforce: Trust Layer 2026 Guide

Businesses are adopting AI at an accelerating pace, and that adoption brings new challenges. Firms must balance innovation with protection: they need to apply AI in ways that respect privacy, comply with the law, and limit operational risk. This is why AI risk management has become a necessity for every enterprise planning to modernize its technology. Salesforce is addressing these issues with the Einstein Trust Layer, which builds secure and controlled AI into the core of its CRM architecture.

Generative AI offers great value, but it raises concerns about data exposure, model bias, and regulatory pressure. These concerns are sharper for teams planning a Salesforce CRM implementation in 2026, when compliance requirements are expected to be stricter. The Einstein Trust Layer addresses them by combining enterprise-grade security, zero data retention, and automated governance of all AI interactions.

Why AI Risk Management Matters in 2026

Every company that applies AI faces three important tasks: protect customer information, comply with international laws, and prevent discrimination and misuse. Poor governance can lead to inaccurate outputs and significant compliance issues. That is why AI risk management is central to any AI project. It helps businesses manage uncertainty and encourages the responsible use of generative AI across workflows.

Regulators around the globe are introducing new AI regulations. Businesses must demonstrate that their systems are secure, transparent, and traceable. AI systems that lack audit trails or control layers create long-term exposure for business leaders. The Einstein Trust Layer provides a structured way to govern AI use in line with ethical and regulatory standards.

Inside the Salesforce Einstein Trust Layer

Salesforce created the Einstein Trust Layer to make every AI interaction secure, private, and compliant. It acts as a protective layer between company data and large language models, applying a defined sequence of safeguards to protect data and reduce risk before any AI request is processed.

Zero Data Retention

With zero data retention, third-party model providers never store or reuse the customer data sent to them. This supports compliance with privacy laws and strengthens AI risk management. Zero data retention is also a core part of trusted generative AI security in 2026.

Input and Output Data Masking

The Trust Layer masks personal or confidential data before it reaches the model. This helps prevent exposure and supports compliance with AI regulations. Masking also reduces the chance that sensitive data leaks through prompts or AI-generated outputs.
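To make the masking step concrete, here is a minimal Python sketch of one common pattern: sensitive values are swapped for placeholder tokens before the prompt leaves the trusted boundary, and restored in the response afterwards. It is not Salesforce's implementation. The regular expressions, the token format, and the `mask`/`unmask` helpers are hypothetical, and a production system would rely on a vetted PII detector rather than two patterns.

```python
import re

# Hypothetical detection patterns, for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def mask(text: str) -> tuple[str, dict[str, str]]:
    """Replace sensitive values with placeholder tokens.

    Returns the masked text plus a token-to-value map that is meant to stay
    inside the trusted boundary and is never sent to the model.
    """
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def _substitute(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(mapping)}>"  # unique token per value
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(_substitute, text)
    return text, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore the original values in a model response."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text


masked, tokens = mask("Email jane.doe@example.com or call +1 415-555-0100 today")
print(masked)  # only this masked text would be sent to the model
```

The model only ever sees the placeholders; the mapping needed to reverse them stays with the caller.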

Secure Grounding with Business Data

AI models produce better results when grounded in clean, trusted data. Salesforce retrieves relevant information from the CRM while keeping everything inside secure boundaries. This improves accuracy and supports ethical decision-making across customer-facing workflows.
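Grounding is often done by merging selected record fields into a prompt template. The sketch below assumes a CRM record represented as a plain Python dictionary and a hypothetical allowlist of fields; only allowlisted fields reach the prompt, so unrelated sensitive fields stay behind. The field names, template wording, and `ground_prompt` helper are illustrative assumptions, not Salesforce APIs.

```python
from string import Template

# Hypothetical allowlist: only these record fields may be used for grounding.
ALLOWED_FIELDS = {"Name", "Industry", "OpenCases"}

TEMPLATE = Template(
    "Write a short renewal note for $Name, a $Industry customer "
    "with $OpenCases open support cases."
)


def ground_prompt(record: dict[str, object]) -> str:
    """Fill the template from a CRM-style record.

    Fields outside the allowlist are dropped before substitution, so they
    can never appear in the prompt sent to the model.
    """
    context = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    missing = sorted(f for f in ALLOWED_FIELDS if f not in context)
    if missing:
        raise ValueError(f"record lacks grounding fields: {missing}")
    return TEMPLATE.substitute(context)


record = {"Name": "Acme Corp", "Industry": "retail", "OpenCases": 3,
          "SSN": "123-45-6789"}  # the SSN is never used
print(ground_prompt(record))
```

Failing loudly on missing fields, rather than sending a half-filled prompt, is one way to keep grounded outputs predictable.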

Audit Trails for Compliance

Every prompt and every output is logged. These logs help compliance teams track AI behavior and maintain transparency. They also strengthen ethical AI governance and simplify audits across departments.
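A simple way to picture an audit trail is an append-only list of structured entries that can be exported for reviewers. The `AuditLog` class below is a hypothetical sketch, not the Trust Layer's actual log format. It assumes prompts are masked before being recorded and exports entries as JSON Lines (one JSON object per line).

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only log of AI interactions (illustration only)."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def record(self, user: str, model: str, prompt: str, response: str) -> dict:
        """Append one interaction; entries are never edited or removed."""
        entry = {
            "seq": len(self._entries),  # monotonically increasing position
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            "prompt": prompt,  # assumed to be the already-masked prompt
            "response": response,
        }
        self._entries.append(entry)
        return entry

    def by_user(self, user: str) -> list[dict]:
        """Return every entry for one user, e.g. for an access review."""
        return [e for e in self._entries if e["user"] == user]

    def to_jsonl(self) -> str:
        """Export all entries as JSON Lines for auditors."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self._entries)


log = AuditLog()
log.record("alice", "model-a", "Summarize the case for <EMAIL_0>", "Summary...")
print(log.to_jsonl())
```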

Support for Multiple LLMs

Salesforce offers flexibility in model choice: companies can use Salesforce-provided models or connect external LLMs. Even when external models are used, the Trust Layer maintains the same strict governance. This approach provides flexibility without compromising AI risk management.
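The idea of one governance layer for many models can be sketched as a gateway that every request must pass through, whichever model serves it. In this hypothetical Python example, a "model" is any function from prompt to response, and the policy is a simple blocked-term check plus logging. The class name, the policy, and the model names are assumptions chosen for illustration, not Salesforce components.

```python
from typing import Callable

# A "model" here is any function from prompt text to response text; real
# connectors (Salesforce-provided or external) are outside this sketch.
Model = Callable[[str], str]


class PolicyGateway:
    """Hypothetical single entry point that applies identical rules to every
    registered model, so switching providers never changes governance."""

    def __init__(self, blocked_terms: set[str]) -> None:
        self.models: dict[str, Model] = {}
        self.blocked_terms = {t.lower() for t in blocked_terms}
        self.log: list[tuple[str, str, str]] = []  # (model, prompt, response)

    def register(self, name: str, model: Model) -> None:
        self.models[name] = model

    def ask(self, name: str, prompt: str) -> str:
        if name not in self.models:
            raise KeyError(f"model {name!r} is not registered with the gateway")
        # Same policy check for every model, internal or external.
        if any(term in prompt.lower() for term in self.blocked_terms):
            raise PermissionError("prompt violates gateway policy")
        response = self.models[name](prompt)
        self.log.append((name, prompt, response))  # same logging for every model
        return response


gateway = PolicyGateway({"password"})
gateway.register("internal", lambda p: "internal: " + p)
gateway.register("external", lambda p: "external: " + p)
print(gateway.ask("external", "Summarize open cases"))
```

Because the checks live in the gateway rather than in each connector, adding an external model does not require re-implementing the rules.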

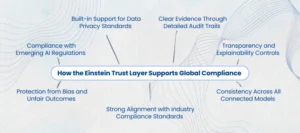

How the Einstein Trust Layer Supports Global Compliance

Companies now operate in a world shaped by fast-moving AI regulations. They must follow strict rules for data privacy, auditability, fairness, and transparency. These rules apply across industries like finance, healthcare, public services, and retail. The Salesforce AI risk management system helps enterprises stay compliant by building governance into every AI request.

The Trust Layer follows a lifecycle approach to AI risk management and regulatory compliance. It scans inputs, protects personal data, checks grounding sources, and records decision paths. These capabilities help teams meet standards introduced by major global frameworks and support strong ethical AI governance across Salesforce environments.

Compliance with Emerging AI Regulations

Many countries are introducing new AI laws that require safe, transparent, and well-documented AI usage. The Salesforce Einstein Trust Layer supports these rules through strong guardrails and automated checks. This helps reduce compliance gaps and supports cross-border adoption for companies that rely on Salesforce tools for AI compliance and risk management.

Built-in Support for Data Privacy Standards

The Trust Layer protects personal data before it reaches any model. Features like masking, encryption, and zero data retention help companies comply with GDPR, India's DPDP Act, CCPA, and other global privacy laws. These protections reduce exposure and keep sensitive information inside enterprise boundaries, supporting advanced generative AI security practices.

Clear Evidence Through Detailed Audit Trails

Regulators want proof that companies use AI responsibly. They expect detailed logs, version history, and model output records. The Trust Layer captures every interaction with full context. These audit trails support investigations, simplify documentation, and reduce uncertainty in AI risk management workflows.

Transparency and Explainability Controls

Enterprises need to understand how AI reaches each result. They also need documentation for reviewers and auditors. The Trust Layer provides visibility across data grounding, prompt behavior, and model outputs. This simplifies explainability and supports ethical AI governance in high-risk enterprise workflows.

Protection from Bias and Unfair Outcomes

Bias in AI systems can create compliance issues and reputational harm. The Trust Layer helps reduce bias by grounding generative models in accurate business data. This lowers the risk of misleading or discriminatory outcomes and supports the fairness standards required by AI regulatory frameworks.

Consistency Across All Connected Models

Businesses often use multiple LLMs across different teams, which increases governance complexity. Salesforce's approach to AI risk management creates a unified policy boundary across every model. It applies consistent rules, logs, and protections even when enterprises use third-party LLMs, supporting long-term risk management across Salesforce and connected systems.

Strong Alignment with Industry Compliance Standards

Industries with strict requirements need predictable and secure AI behavior. The Trust Layer supports compliance needs under frameworks such as ISO 42001, SOC 2, HIPAA, and PCI DSS. These controls help enterprises adopt secure AI as part of their 2026 Salesforce CRM implementation strategies, with Salesforce development services handling customization.

Why Ethical AI Governance Is No Longer Optional

AI risk management protects businesses from bias, unintended harm, and loss of customer trust. It has become a leadership responsibility. Ethical practices reduce risk and build long-term value for companies. Salesforce's architecture ensures that governance is not an afterthought.

The Einstein Trust Layer is designed to make every output accountable and aligned with enterprise regulations. This is essential for organizations that want to use AI at scale without compromising safety. Ethical frameworks also help teams prepare for audits, comply with internal policies, and make informed decisions.

What This Means for Salesforce CRM Implementation in 2026

The 2026 Salesforce ecosystem will be shaped by secure AI and strong governance. Companies planning new implementations must integrate governance into every stage of their CRM strategy. The Einstein Trust Layer will guide how teams use AI across sales, service, commerce, and marketing.

Businesses will expect controlled environments, transparent logs, and zero data retention workflows. They will also require configurations aligned with AI governance principles. This makes Salesforce development services essential for creating secure, scalable, and compliant implementations.

Governance is no longer just a technical feature. It is a competitive advantage for organizations that want to build trust-driven AI workflows.

The Role of Generative AI Security

Generative AI brings efficiency, automation, and speed. However, unsecured models can expose sensitive information or produce biased decisions. Generative AI security lets companies innovate while keeping these risks under control. The Einstein Trust Layer enforces strict oversight so that outputs stay aligned with enterprise standards.

Security teams gain control through encryption, policy-based access, and behavior tracking. This reduces the chance of misuse and supports long-term AI risk management strategies.

Benefits for Enterprises Using Salesforce

Companies that implement Salesforce with the Einstein Trust Layer gain several long-term strategic benefits. These features strengthen AI risk management, improve generative AI security, and make safe, enterprise-wide innovation possible.

Reduced Operational and Compliance Risk

The Trust Layer embeds strict governance controls into every AI interaction. This reduces exposure to regulatory violations, privacy issues, and model failures. With consistent risk checks across all workflows, enterprises maintain stronger AI risk management practices without slowing innovation.

Safe Implementation of Modern AI Features

Businesses can adopt advanced generative AI without compromising the security of their data. Guardrails such as zero data retention, encryption, grounding, and access controls let teams deploy AI safely, particularly in high-risk settings. This supports generative AI security across the entire Salesforce AI risk management ecosystem.

Future-Ready Workflows Aligned with Global Regulations

The Einstein Trust Layer helps teams build workflows that align with upcoming AI regulations. This matters more as governments introduce new rules on transparency, fairness, and accountability. By building in compliance-related features, companies stay prepared for evolving AI regulatory requirements.

Support for Advanced Governance Frameworks

Companies adopting stricter governance guidelines benefit from automated records, monitoring, and traceability. The Trust Layer simplifies audits and supports alignment with standards such as ISO 42001, SOC 2, HIPAA, and industry-specific policies. This strengthens ethical AI governance in business-critical operations.

Scalable and Secure AI Adoption

As businesses expand AI usage, the Trust Layer applies consistent guardrails across all departments and large language models. This helps enterprises scale responsibly while maintaining strong compliance and AI risk management. It also streamlines planning for a 2026 Salesforce CRM implementation by ensuring safe model usage from day one.

Industry-Wide Relevance

These benefits apply across industries, including finance, healthcare, retail, real estate, government services, and manufacturing. Business leaders can be confident that their AI-powered processes are protected by a reliable security and compliance framework.

Conclusion

AI is now central to modern business, but responsible AI is the only scalable path forward. Enterprises must adopt strong AI risk management practices to protect data and follow compliance rules. Salesforce’s Einstein Trust Layer provides a secure foundation for ethical and governed AI. It brings zero data retention, data masking, transparency, and strong compliance features under one unified architecture.

For companies preparing for a Salesforce CRM implementation in 2026, this is the ideal moment to adopt a governance-first approach. At AnavClouds Software Solutions, and through AnavClouds Analytics.ai, we help organizations build responsible, secure, and compliant AI systems inside Salesforce. Our teams deliver Salesforce development services that align governance with every stage of AI transformation. With the right frameworks, businesses can innovate confidently and stay prepared for future AI regulations.